Data Lake vs Data Warehouse vs Lakehouse The Definitive, Practical Guide

Data architecture breakdown: when each model wins (and when it doesn’t)

Warehouses = governed BI at speed.

Lakes = any data at low cost, ML-friendly, governance is on you.

Lakehouses = ACID tables on object storage, unify BI + ML, but require engineering maturity.

Most orgs run hybrids; the trick is getting governance, cost, and interoperability right.

1) Architecture Deep-Dive (How they’re actually built)

Data Warehouse (DW)

Storage/Compute: Columnar, MPP engine (e.g., Snowflake, Redshift, BigQuery, Synapse SQL).

Modeling: Schema-on-write, Kimball/Dimensional (facts/dims) or 3NF.

Workloads: Ad-hoc BI, dashboards, scheduled reports, and some ELT.

Governance: Built-in RBAC, row/column masking, auditing.

Ops Focus: Cost predictability, data contracts, release mgmt for schema changes.

Where it shines: financials, regulated reporting, and KPI stores for exec dashboards.

Data Lake (DL)

Storage: Object storage (S3/ADLS/GCS), raw + curated zones (bronze/silver/gold).

Compute: Spark, Presto/Trino, Flink; query with Athena/BigQuery external tables/Synapse Serverless.

Modeling: Schema-on-read; late binding; consistent conventions > rigid schemas.

Workloads: ML training, feature engineering, log analytics, streaming ingestion.

Governance: You assemble it (Lake Formation/Purview/Ranger + table-format ACLs + catalogs).

Where it shines: variety + volume at low $; experimentation; future-proof archiving.

Lakehouse (LH)

Storage: Object storage + ACID table formats (Delta/Iceberg/Hudi).

Compute: Spark/Trino/engine of choice; BI direct-query via connectors.

Table Formats (core differences):

Delta Lake: Transaction log on files; strong Spark/Dbrx ecosystem; features like OPTIMIZE, Z-ORDER, time travel.

Apache Iceberg: Hidden partitioning, snapshot isolation, strong multi-engine support (Snowflake/BigQuery/Redshift/Trino all leaning in).

Apache Hudi: Built-in upserts, incremental pulls, strong CDC ingestion patterns.

Catalog: Unity Catalog/Glue/Hive/Polaris/Tabular.

Workloads: BI + ML + streaming on the same physical tables.

Governance: Table-level RBAC/ABAC, row/column masking, lineage via OpenLineage, Purview/UC.

Where it shines: one copy, many engines; fewer pipelines; time travel; CDC-friendly.

2) Performance Engineering: What actually makes things fast

File layout

Target 128–1024 MB parquet/orc file sizes (avoid tiny files).

Use partitioning for high-cardinality but commonly filtered columns (e.g.,

date,region).For Delta/Iceberg, leverage clustering/sorting (Delta’s Z-ORDER or Iceberg sort orders) to speed selective queries.

Schedule compaction (OPTIMIZE/REWRITE DATA FILES) and vacuum old snapshots.

Query patterns

Precompute gold aggregates for BI; don’t make BI tools scan bronze.

Use materialized views/Delta Live Tables/DBT for hot paths.

Cache dim tables in the engine memory for star joins.

Streaming

For lakehouse: exactly-once via transactional sinks (Delta/Iceberg).

Tune micro-batch intervals, checkpointing, and watermarking for late data.

3) Governance & Security (non-negotiables)

Identity & Access: Centralize via IAM/AAD; enforce least privilege at table/column/row level.

Data Classification: Tag PII/PHI; propagate tags to policies (masking/deny).

Lineage & Observability: OpenLineage/Marquez, Databricks lineage, Purview; tie alerts to SLA/SLOs.

Quality Gates: Great Expectations/Soda/Deequ in pipelines; fail fast on contract breaches.

Audit & Retention: Time travel + WORM buckets for compliance; implement legal hold processes.

Keys & Secrets: KMS/Key Vault/Cloud KMS + envelope encryption; rotate and monitor use.

4) Cost Model Reality Check

Warehouses: Pay per compute slot/credit; egress for external tables; watch for BI concurrency spikes.

Lakes/Lakehouses: Cheap storage, compute is the bill—optimize compaction, cache, and file pruning.

Universal tips:

Autosuspend/auto-scale; use serverless only where bursty.

Tag workloads by domain/cost center; publish monthly FinOps dashboards.

Kill zombie clusters/jobs; enforce TTL on dev sandboxes.

5) Interoperability (avoid lock-in)

Prefer open table formats (Delta/Iceberg/Hudi) + open file formats (Parquet/ORC).

Separate catalog (Glue/Polaris/UC) from compute engines to swap engines later.

Standardize contracts at the domain boundary (Avro/Protobuf/JsonSchema), publish in a registry.

6) Concrete Reference Architectures

AWS

Warehouse: Redshift (RA3) + Spectrum; or Snowflake on AWS.

Lake: S3 + Glue Data Catalog + Lake Formation + Athena/EMR/Trino.

Lakehouse: S3 + (Delta/Iceberg/Hudi) + EMR/Databricks/Trino + Redshift/Snowflake connectors.

Streaming: MSK/Kinesis → Spark/Flink → Delta/Iceberg.

Azure

Warehouse: Synapse Dedicated SQL / Snowflake.

Lake: ADLS Gen2 + Synapse Serverless + Spark Pools/Databricks + Purview.

Lakehouse: ADLS + Delta/Iceberg on Databricks/Spark + Direct Lake (Fabric) for BI; Power BI semantic model.

Streaming: Event Hubs/Kafka → Structured Streaming/Flink → Delta.

GCP

Warehouse: BigQuery native.

Lake: GCS + Dataproc/Trino + BigLake external tables.

Lakehouse: Iceberg/Delta on GCS + BigQuery/Dataproc/Trino with the same tables.

Streaming: Pub/Sub → Dataflow/Flink/Spark → Iceberg/Delta.

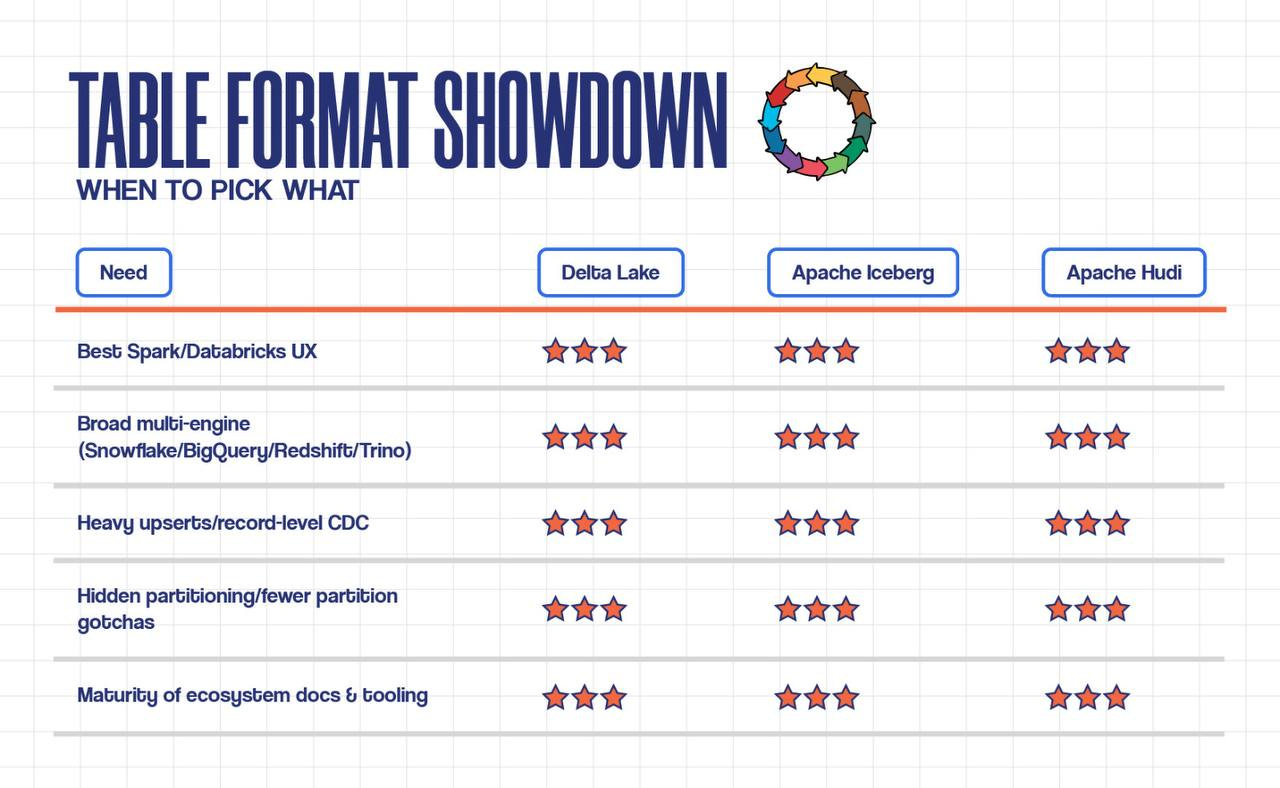

7) Table Format Showdown (when to pick what)

Pragmatic pick:

Delta if you’re Spark/Databricks-centric.

Iceberg if you need many engines today/tomorrow.

Hudi if upserts/incrementals are your core workload.

8) Migration Patterns (and traps)

Warehouse → Lakehouse

Land raw to bronze; build silver with cleansed schemas; publish gold marts.

Dual-run key dashboards; validate row counts & aggregates.

Gradually point BI to lakehouse tables; keep warehouse for critical finance early on.

Lake → Lakehouse

Introduce table format (Delta/Iceberg/Hudi) on silver/gold only.

Back-fill historical partitions; set compaction schedule.

Add governed access and a proper catalog before inviting BI.

CDC ingestion

Use Debezium/DMS → Kafka/MSK → Spark/Flink to transactional tables.

Choose Hudi (MERGE-ON-READ) or Delta/Iceberg with efficient MERGE INTO patterns.

Common anti-patterns

BI pointed at bronze.

Millions of tiny files.

No data ownership (mesh principles), everything centralized.

Ignoring deletes/GDPR in immutable logs.

Over-partitioning (e.g., by user_id) causing hot metadata and slow planning.

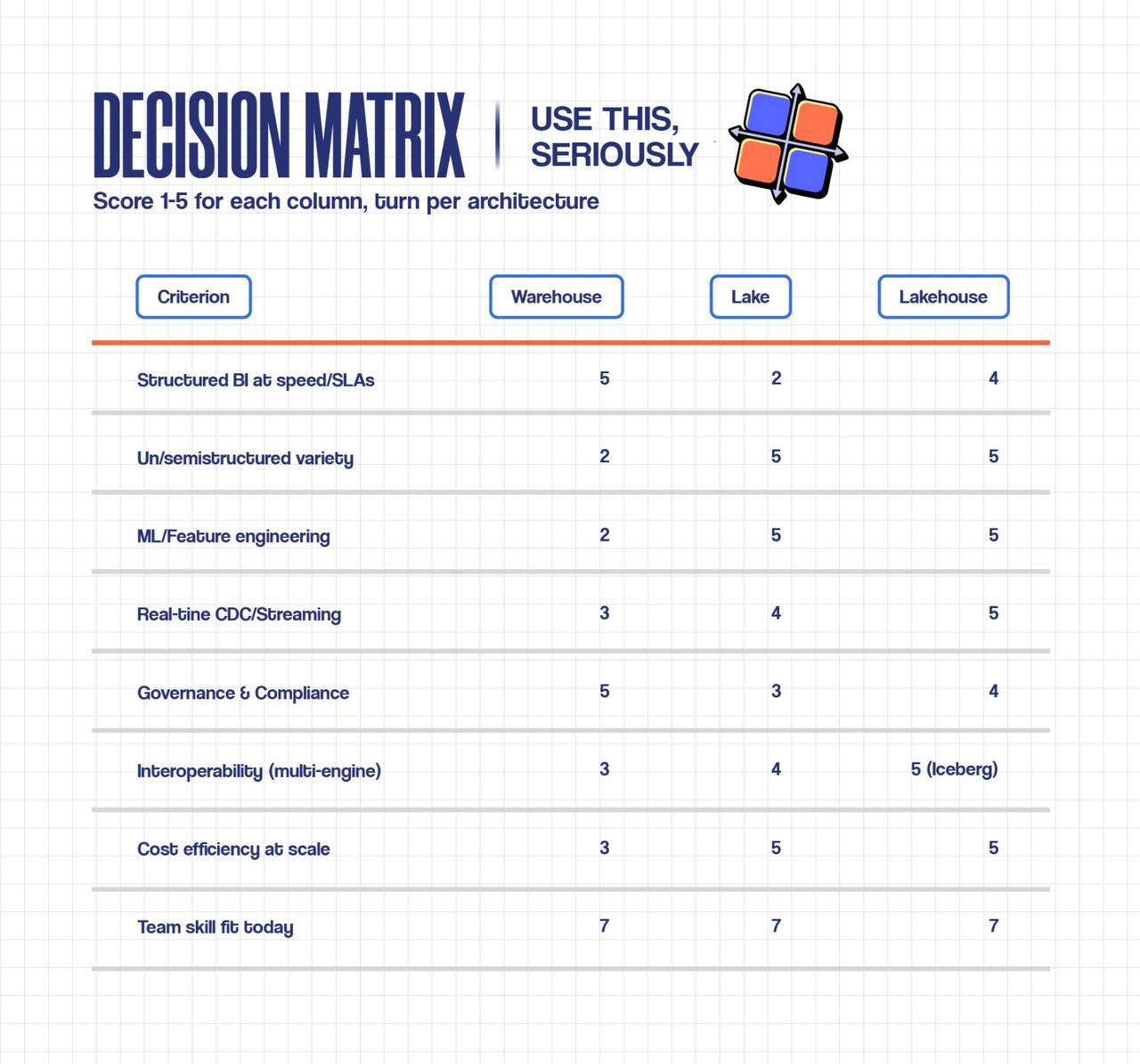

9) Decision Matrix (use this, seriously)

If Lakehouse wins but your team skill fit is low, phase in with a dual-run hybrid for 6–12 months.

10) Operating Model: who owns what (RACI sketch)

Domain Teams (Data Product Owners): own schemas, SLAs, quality rules (R/A).

Platform Team: storage, catalogs, security baselines, CI/CD for data (R).

Governance/Compliance: policies, audits, retention (A).

BI/Analytics: semantic layer, certified dashboards (R).

Security: KMS, key rotation, access reviews (A).

Publish a data product spec per domain: purpose, contracts, lineage, SLOs, PII tags, owners.

11) SLOs & KPIs to track

Freshness SLO: e.g., <10 min for gold tables X/Y.

Quality KPIs: failed validation rate <0.5%; drift alerts/week.

Cost/FTE: $/TB/month by domain; $/1000 queries.

Reliability: pipeline success rate; mean time to recovery.

Adoption: % BI queries served from lakehouse vs warehouse.

12) What to implement first (90-day plan)

Days 0–30

Pick table format + catalog; define bronze/silver/gold.

Stand up ingest (batch + one streaming source) and governance baseline (RBAC, masking).

Ship one business-critical gold table + a certified dashboard.

Days 31–60

Add CDC for one key system; implement compaction/OPTIMIZE/VACUUM.

Integrate quality checks (Great Expectations) in CI/CD.

Publish cost dashboard + tagging.

Days 61–90

Expand BI coverage (semantic layer/metrics store).

Onboard a second domain; introduce time-travel audits.

Run deprecation plan for legacy copies.

FAQ (you’ll get asked)

Can a warehouse do ML? You can feature-engineer in SQL, but training/inference at scale usually moves to lake/lakehouse.

One stack to rule them all? Not in large enterprises. Aim for interoperable stacks, not monoculture.

Which is cheapest? Storage favors lake/lakehouse. Total cost depends on query patterns and governance.

Bottom Line

If you need clean BI with tight SLAs and limited data types → Warehouse.

If you need any data, ML, and low storage cost → Lake (but add governance).

If you want one copy for BI + ML with ACID and open formats → Lakehouse (with the skills to run it).

At scale, expect a hybrid—design for open formats, catalogs, and governance from day one.

Next in this series: Data Mesh vs Data Fabric—when to decentralize, how to govern, and how not to blow up your platform.