eBPF + Cilium: Why Your Network Security is Broken (And How to Fix It)


The Problem: Your Security is Slower Than Your Attacks

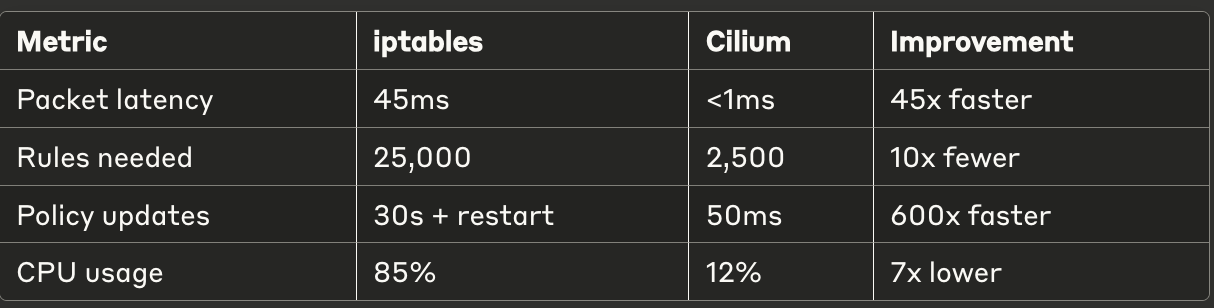

Your Kubernetes cluster has 500 pods. Your nodes carry 15,000 iptables rules, checked one after another. A packet can take 45ms to process.

Meanwhile, an attacker just moved laterally between your microservices in 2ms.

The math is simple: slow security = no security.

Why iptables Doesn't Scale

# Check your current pain (run as root on a node; counts rules in every table, not just filter)

iptables-save | grep -c '^-A'

# Probably something like:

# 100 services = 2,000 rules

# 500 services = 25,000 rules

# 1000 services = cluster meltdown
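kube-proxy keeps most of its rules in the nat table, so a per-table breakdown tells you more than a single total. Here is a minimal sketch, assuming you can run iptables-save as root on a node. The helper name count_rules_per_table is made up for this example.

```shell
# Count appended rules ("-A ..." lines) per table in iptables-save output.
# Reads the dump from stdin, so it also works on a saved file.
count_rules_per_table() {
  awk '
    /^\*/  { table = substr($0, 2) }     # "*nat", "*filter", ... opens a table
    /^-A / { count[table]++; total++ }   # every "-A" line is one rule
    END {
      for (t in count) printf "%s %d\n", t, count[t]
      printf "total %d\n", total
    }
  ' | sort
}

# Usage on a node (needs root):
#   iptables-save | count_rules_per_table
```

If the nat line dwarfs everything else, service routing rather than your own firewall rules is what is filling the chains.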

The performance cliff:

More services = more rules to walk per packet, so networking slows down as the cluster grows

Policy updates mean rewriting large rule sets, and can end in pod restarts

No visibility into what's actually happening

Can't see application-layer attacks
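To see why rule count hurts, it helps to model the lookup itself. The sketch below is a toy model, not a benchmark: it counts how many comparisons a sequential chain needs before it reaches the matching rule, next to a keyed map lookup. The function names are hypothetical.

```shell
# Model only: count comparisons needed to find the rule for one service.
# A sequential chain checks rules in order until one matches.
chain_checks() {   # $1 = total rules, $2 = position of the matching rule
  awk -v n="$1" -v hit="$2" 'BEGIN {
    checks = 0
    for (i = 1; i <= n; i++) { checks++; if (i == hit) break }
    print checks
  }'
}

# A keyed (hash map) lookup costs one step, whatever the table size.
map_checks() {
  echo 1
}

chain_checks 15000 15000   # worst case: the match is the last rule
map_checks
```

The worst case for the chain grows in step with the number of rules, while the map lookup stays flat.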

Enter eBPF: Security at Kernel Speed

Think of eBPF as giving your kernel superpowers:

Instead of this (slow):

Packet → Netfilter hooks → walk through 15,000 iptables rules → Decision

You get this (fast):

Packet → Kernel eBPF program → Instant decision

Real numbers:

iptables: 45ms per packet

eBPF: <1ms per packet

That's more than 45x faster

Cilium: eBPF Made Simple

Identity-Based Security

Old way (IP-based):

# Enforced as per-IP rules, which go stale whenever pods restart with new IPs

spec:
  podSelector:
    matchLabels:
      app: backend
  ingress:
  - from:
    - podSelector:
        matchLabels:
          app: frontend

New way (Identity-based):

# Works regardless of IP changes

apiVersion: "cilium.io/v2"
kind: CiliumNetworkPolicy
metadata:
  name: secure-api
spec:
  endpointSelector:
    matchLabels:
      app: backend
  ingress:
  - fromEndpoints:
    - matchLabels:
        app: frontend
        security.level: trusted
    toPorts:
    - ports:
      - port: "8080"
        protocol: TCP
      rules:
        http:
        - method: "GET"
          # path is a regular expression, so use ".*" rather than a shell-style "*"
          path: "/api/users/.*"

Key difference: Cilium sees WHO is making the request, not just WHERE it's coming from.
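The idea behind identity is that it comes from a pod's labels, not its address. Here is a simplified model of that, assuming nothing about Cilium's internals: Cilium allocates a numeric identity per unique label set, and the checksum below is only a stand-in for that allocation. label_identity is a hypothetical helper.

```shell
# Simplified model: derive a stable identity from a pod's label set.
# Sorting first makes the result independent of label order.
label_identity() {
  printf '%s\n' "$@" | sort | cksum | cut -d ' ' -f 1
}

# Same labels in a different order (or on a pod with a new IP): same identity.
label_identity app=frontend security.level=trusted
label_identity security.level=trusted app=frontend
```

No IP address appears anywhere in the input, which is why a restarted pod keeps matching the same policy.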

Live Policy Updates (No Restarts!)

Traditional approach:

kubectl apply -f new-policy.yaml

kubectl rollout restart deployment/app # Downtime!

# Wait 5 minutes...

# Debug broken connections...

Cilium approach:

kubectl apply -f cilium-policy.yaml

# Done. Zero downtime. Policy active immediately.
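If you want a safety net around that one-liner, a server-side dry run catches schema mistakes before anything changes. This is a sketch, assuming kubectl already points at the right cluster and namespace. apply_and_verify is a hypothetical wrapper, and cnp is the short name for CiliumNetworkPolicy resources.

```shell
# Validate, apply, then confirm the policy object exists.
# Each step runs only if the previous one succeeded.
apply_and_verify() {
  policy_file=$1   # e.g. cilium-policy.yaml
  policy_name=$2   # metadata.name inside that file
  kubectl apply --dry-run=server -f "$policy_file" &&
    kubectl apply -f "$policy_file" &&
    kubectl get cnp "$policy_name"
}

# Usage:
#   apply_and_verify cilium-policy.yaml secure-api
```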

Debugging with Hubble

Traditional debugging:

tcpdump -i any port 8080

# Stare at 10,000 packets

# Give up and restart everything

Hubble debugging:

hubble observe --follow

# See exactly what's happening (simplified; real Hubble output reports verdicts as FORWARDED or DROPPED):

frontend-pod → backend-pod http-request ALLOWED (GET /api/users)

malicious-pod → database-pod tcp-connect DENIED (Policy violation)
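On a busy cluster the unfiltered stream scrolls too fast to read, so you will usually narrow it down. Here is a sketch of two filters, assuming the hubble CLI can reach Hubble Relay (for example through `cilium hubble port-forward`). The function names and the example pod name are hypothetical.

```shell
# Stream only dropped flows in one namespace (defaults to "default").
watch_drops() {
  hubble observe --namespace "${1:-default}" --verdict DROPPED --follow
}

# Show the last 20 HTTP flows sent to a given pod, e.g. default/backend-pod.
recent_http_to() {
  hubble observe --to-pod "$1" --protocol http --last 20
}

# Usage:
#   watch_drops production
#   recent_http_to default/backend-pod
```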

You can literally watch attacks being blocked in real-time.

Performance That Actually Matters

Getting Started This Week

1. Install Cilium (10 minutes)

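helm repo add cilium https://helm.cilium.io/

# kubeProxyReplacement=true is for Cilium 1.14+; older releases use "strict".
# Replacing kube-proxy also needs k8sServiceHost and k8sServicePort set to your API server.
helm install cilium cilium/cilium \
  --namespace kube-system \
  --set kubeProxyReplacement=true \
  --set hubble.relay.enabled=true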
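Before writing policies, it is worth confirming the agents actually came up. This is a minimal check, assuming the chart's default DaemonSet name (cilium) and pod label (k8s-app=cilium). Pass the namespace you installed into if it is not kube-system. check_cilium is a hypothetical helper.

```shell
# Wait for the Cilium DaemonSet to roll out, then list the agent pods per node.
check_cilium() {
  ns=${1:-kube-system}   # namespace the chart was installed into
  kubectl -n "$ns" rollout status daemonset/cilium --timeout=5m &&
    kubectl -n "$ns" get pods -l k8s-app=cilium -o wide
}

# Usage:
#   check_cilium
#   check_cilium default
```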

2. Create Your First Policy (5 minutes)

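apiVersion: "cilium.io/v2"
kind: CiliumNetworkPolicy
metadata:
  name: deny-all-default
spec:
  endpointSelector: {}
  # An empty rule in each direction puts every selected pod into default deny.
  # Denying all egress also blocks DNS until you add an explicit allow rule.
  ingress:
  - {}
  egress:
  - {}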

3. Watch It Work (1 minute)

hubble observe --follow

# Make a request and watch it get blocked
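For the request itself, a throwaway pod is the quickest probe. This is a sketch, assuming a deny-all policy is active in the current namespace and that the busybox image can be pulled. The probe name and the target URL are hypothetical.

```shell
# Start a one-off pod, try an HTTP request with a 3 second timeout, then clean up.
# With default deny in place the request should time out and the command fail.
probe() {
  url=$1   # e.g. http://backend:8080/
  kubectl run policy-probe --rm -i --restart=Never --image=busybox -- \
    wget -q -O- -T 3 "$url"
}

# Usage (keep hubble observe --follow running in another terminal):
#   probe http://backend:8080/
```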

The Simple Truth

eBPF + Cilium gives you:

Faster networking

Better security

Zero-downtime policy updates

Real-time attack visibility

Traditional iptables gives you:

Slower performance with more services

Blind spots in security

Downtime for policy changes

No idea what's actually happening

Your Action Plan

Today: Check how many iptables rules you have

This week: Set up Cilium in a test cluster

Next month: Plan your production migration

The longer you wait, the slower and less secure your cluster becomes.