Kubernetes Affinities: Production Engineer's Guide

You know that sinking feeling when your pods are all crammed onto one node, or when your Redis cache is running three availability zones away from your web app? Yeah, that's an affinity problem.

This week, we're diving deep into Kubernetes scheduling constraints that actually matter in production. No theory – just the commands you'll need when things go sideways.

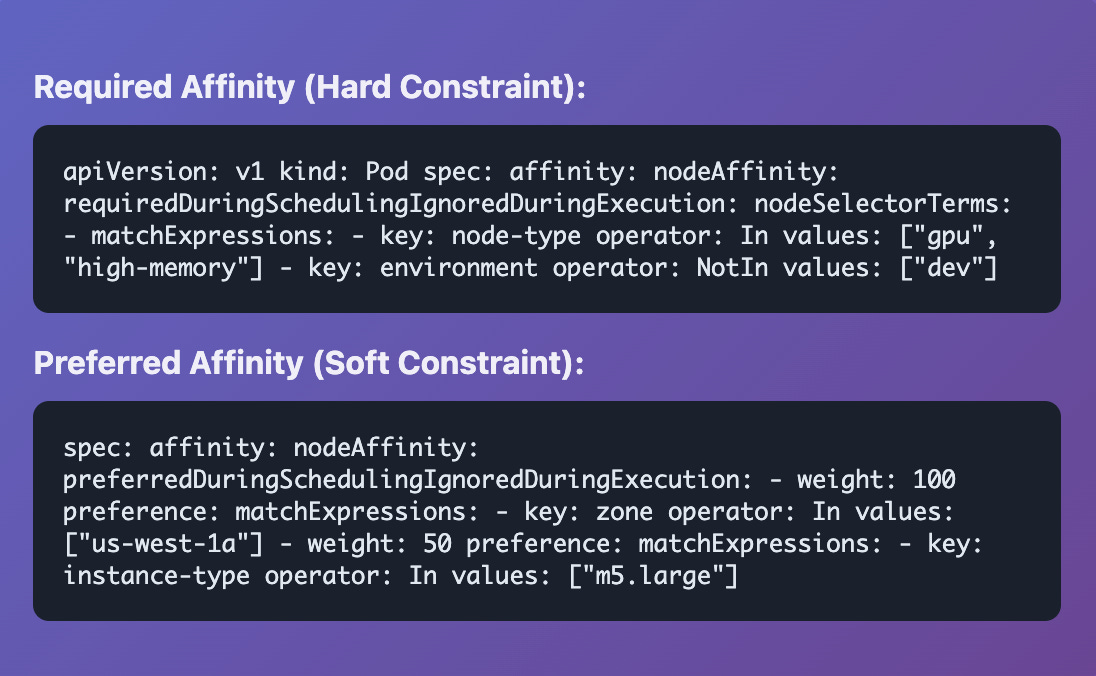

Node Affinity: Pod Preferences for Nodes

The Problem: Your GPU workload just got scheduled on a CPU-only node. Your high-memory database is running on the smallest instance in your cluster.

When You Need This:

Schedule GPU workloads only on GPU nodes

Keep production pods off development nodes

Ensure pods run in specific availability zones

Prefer high-memory nodes for memory-intensive apps

Controls which nodes your pods can be scheduled on based on node labels.

Think of it as "pod preferences for nodes."

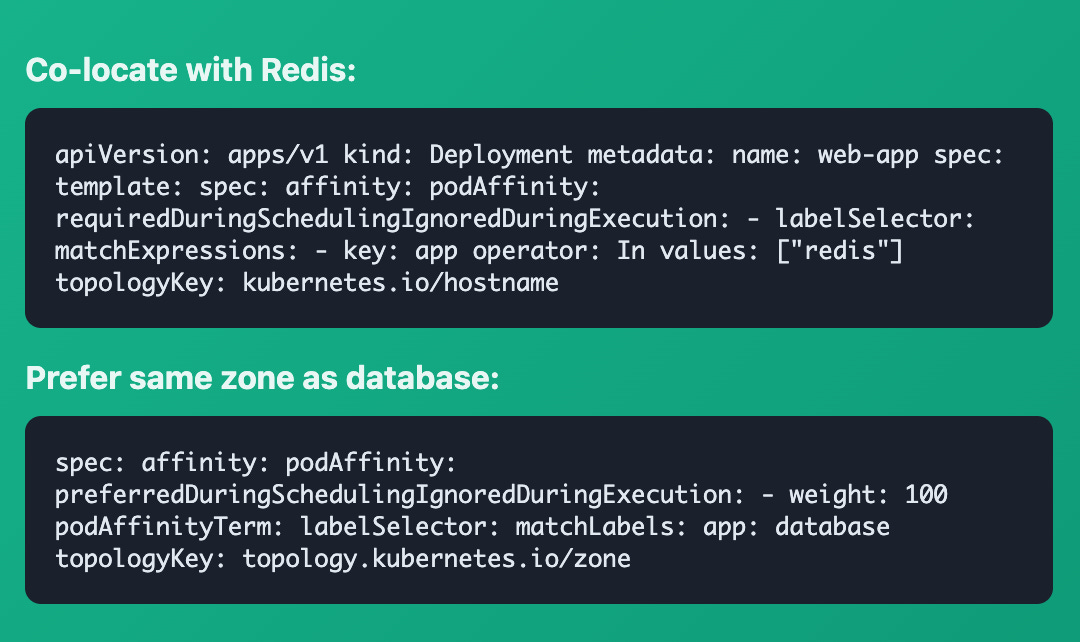

Pod Affinity

The Problem: All three replicas of your API server just went down because they were on the same node. Your database replicas are competing for the same disk I/O.

Pods → Pods (Together)

Attracts pods to run near other pods. Used to co-locate related workloads for performance or compliance.

Common Use Cases:

• Keep web servers near their Redis cache

• Co-locate microservices that communicate frequently

• Ensure data locality for performance

• Group services in the same availability zone

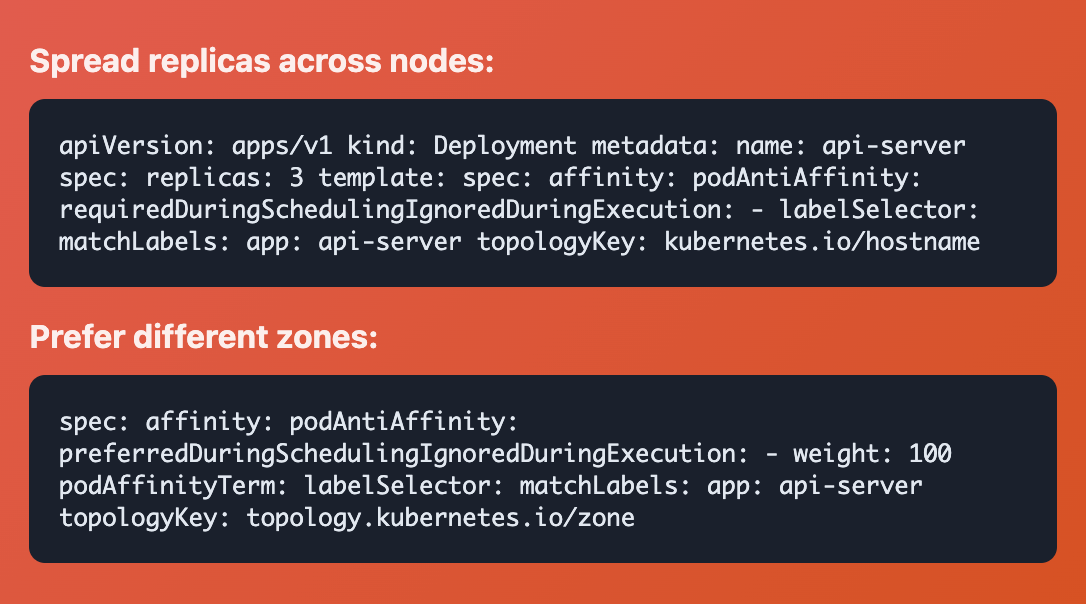

Pod Anti-Affinity

The Problem: All three replicas of your API server just went down because they were on the same node. Your database replicas are competing for the same disk I/O.

Pods → Pods (Apart)

Repels pods away from other pods. Critical for high availability and preventing single points of failure.

Common Use Cases:

• Spread replicas across different nodes

• Avoid resource contention between similar workloads

• Ensure high availability across zones

• Prevent noisy neighbor problems

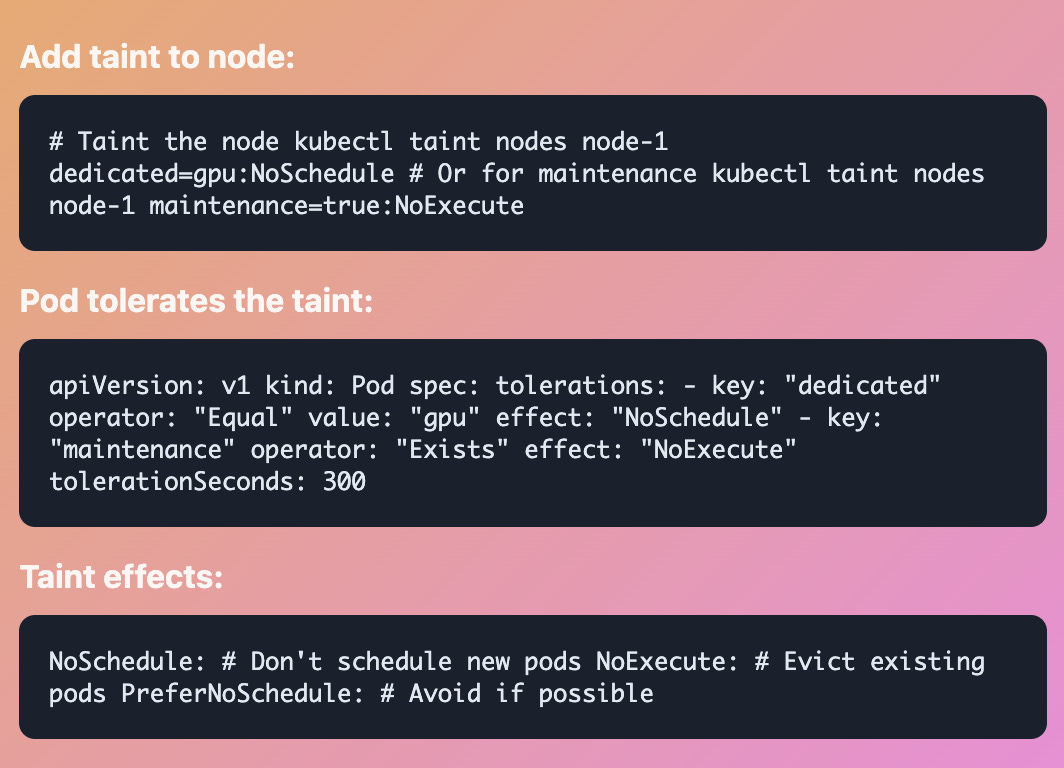

Taints & Tolerations

The Problem: Your expensive GPU nodes are running random workloads. You need to drain a node for maintenance but pods keep getting rescheduled there.

Node Defense System

Nodes repel pods by default (taints), but pods can declare tolerance. The opposite of affinity - exclusion by default.

Common Use Cases:

• Dedicate nodes for specific workloads

• Prevent scheduling during maintenance

• Isolate problematic or sensitive workloads

• Handle node failures gracefully

Debug Commands & Troubleshooting

Check Node Labels & Taints

kubectl get nodes --show-labels

kubectl describe node <node-name>

kubectl get nodes -o json | jq '.items[].spec.taints'Check Pod Placement

kubectl get pods -o wide

kubectl describe pod <pod-name>

kubectl get events --field-selector reason=FailedSchedulingAnalyze Scheduling Issues

kubectl describe pod <pending-pod>

kubectl logs -n kube-system kube-scheduler

kubectl get events --sort-by='.lastTimestamp'Check Resource Availability

kubectl top nodes

kubectl describe nodes | grep -A5 "Allocated resources"

kubectl get pods --all-namespaces -o wideWhen to Use Which Affinity Type?

Need to control which NODES pods run on?

→ Use Node Affinity (GPU nodes, zones, instance types)

Need pods to run TOGETHER for performance?

→ Use Pod Affinity (cache with app, microservices)

Need pods to run APART for availability?

→ Use Pod Anti-Affinity (spread replicas, avoid conflicts)

Need to RESERVE nodes or EXCLUDE pods by default?

→ Use Taints & Tolerations (dedicated nodes, maintenance)